On 11 and 12 September 2017 the Ghent Centre for Digital Humanities organised a conference in the lovely Rubenszaal at the Royal Academies for Sciences and Arts of Belgium in Brussels. The conference aim was to bring together humanists, social scientists and computational scientists who have been working with historical serial texts and/or periodicals, giving everyone involved the opportunity to discuss the progress of current tools and approaches and to think about the theoretical and heuristical limitations and possibilities that came up during research projects. The methods used and the research content that was discussed was very diverse, ranging from NLP to Linked Data and Social Network analysis, to name a few. It would take too far to give an extensive outline of every talk that was presented, so my goal is to highlight a few key points that I took with me from the conferences. If you want to read the abstracts of the presentations, you can find them here.

It’s all a matter of OCR quality. Let’s take it serious1

A first subject that was very present during most of the talks was OCR quality. This is often one of the first hurdles any ‘digital historian’ comes across when trying to analyse their texts, be it periodicals, conference reports or books.

The talk of Thomas D’Haeninck about technics to recognize social reform topics and to create a genealogy of social causes showed that OCR quality really is important to get accurate results, and especially if you want to perform topic modelling or text mining. As D’haeninck showed: out of the enormous amount of congress reports they gathered, a mere 10% was good enough to do topic modelling or text mining. Furthermore, he summarised that the topic modelling didn’t lead to especially new insights, although he was to some extend able to see trends appearing when it came to social charity.

The presentation of Thomas Verbruggen and Thomas D’Haeninck in a later session explored this topic further by looking at digital approach towards the international scope of Belgian socialist newspaper. Text mining and Named Entity Recognition (NER) were used to search for expressions of banal internationalism in two newspapers. The results made clear that running a NER programme isn’t that simple and that this is often a trial and error exercise with different NER packages as the NER used for their experiment had a lot of anomalies.

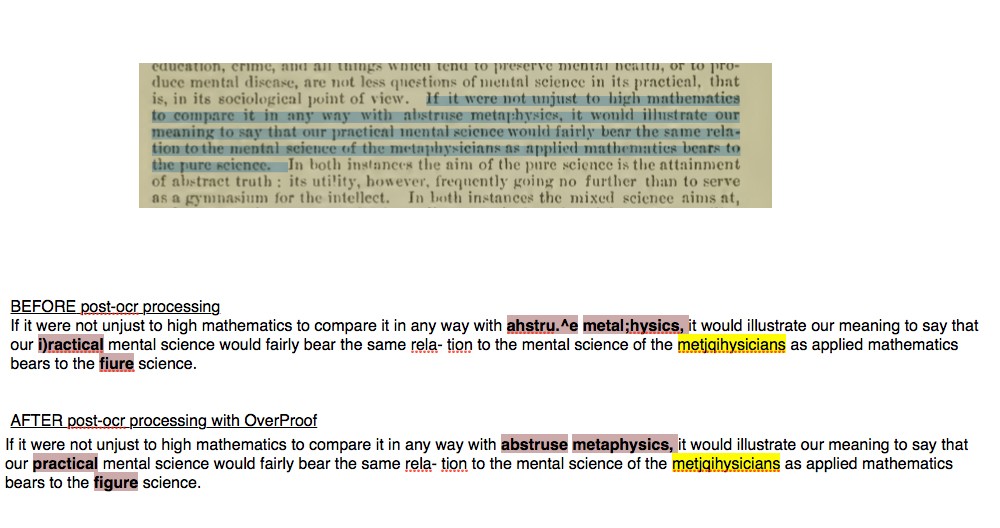

The talk of Manfred Nölte about the journal Die Grenzboten showed again that good OCR and post-OCR processing is important for delivering high quality full texts to researchers. In his talk, he showed that something can be done about OCR mistakes. The Grenzboten project (87 years, 187.000 physical pages) took approximately 2,5 years to digitise the journals (18 months) and to perform post-OCR correcting (12 months). One of the goals of post-OCR processing was to boost its recognition rate from circa 92% to 99%, out of which they reached 98%. To accomplish this, they made for example use of the Australian company ProjectComputing who provides the service OverProof to enhance OCR. On their website, I did a little test, and although the ‘programme’ didn’t recognise every word, it did make some good improvements, as the screenshots below show:

- 1. Quote adapted from a tweet by Masoud Shahnazari on 12 September 2017 https://twitter.com/CyberLiterature/status/907597663352344581.

As my own research will also make use of a big corpus that needs to be OCR’ed, and that I also would like to use text mining, topic modelling or NER, these presentations were a big eye-opener because it made me question the time that I will need to accomplish this and also made me think about the usefulness of such techniques in some cases. It really is something you should consider very careful before diving in to such big endeavour on your own, as these were all collaborative projects.

On the other hand, in the case presented by Mike Kestemont and Thorsten Ries about challenges in stylometry and authorship attribution methods, it seemed that OCR correction wasn’t necessary as the stylometry approach can handle a lot of OCR ‘noise’ before a text can’t be attributed anymore. Also during the talk of Seth van Hooland about Topic Modeling and Word2Vec to explore the Archives of the European Commission more positive results were created by using LDA, as NER didn’t give convincing results.

Getting to the heart of a researcher is letting them choose their own colour, trust me, it works.1

Another aspect that was mentioned, is that our digital approaches don’t always need to be that complicated, and that with simple approaches we can maybe sometimes accomplish a lot more in our research. This was for example the case with the research of François Dominic Laramée who gave a talk about text mining a noisy corpus of Ancien Régime French periodicals. He had to deal with “significant methodological challenges, due in no small part to the unreliable quality of the OCR’ed source material […]”. To tackle some of these spelling mistakes he used a Levenshtein’s algorithm to identify keyword tokens that were corrupted by the OCR and also used manual reconstruction of metadata. Due to these problems, more ‘low key’ digital methods (token counts, metadata analysis and co-occurrence networks) had to be used and were sufficient to produce interesting results.

Related to the importance of simplicity Joris van Eijnatten stressed in his talk ‘In praise of frequency’, that we need simplicity in order to attract researchers into the field of digital humanities, and that “simple counts [could] produce intuitively more convincing results than the complicated 'shock and awe' algorithms often vaunted in digital humanities' projects”.

- 1. Quote from Joris Eijnatten during his presentation, captured in a tweet by Joke Daems on 12 september 2017. https://twitter.com/jokedaems/status/907582089918377984.

Also Kaat Wils argued that simplicity is necessary but she also stressed that we shouldn’t forget that research is always a combination of close and distant reading, and that digital techniques – be they simple word searches or more complex topic modelling – also has its limits and does not necessary tell something about the content or meaning of a text.

People & data

During the discussion panel, Sally Chambers recapped the two-day conference in three words: tools (which is outlined above), people and data. The prominence of these last two topics made clear that preserving, digitising and researching serial publications is a collaborative endeavour. A lot of projects have been launched and a lot effort has been put in digitalisation and digital access as the talks of Manfred Nölte, Jean-Philippe Moreux, Dirk Luyten, Marysa Demoor, Marc D'Hoore and Lotte Wilms proved. Telling during these presentations was the problems that libraries as well as researchers face when it comes to the reasons we digitise content (preserving, researching, or both) as this often gives issues concerning copyright restrictions1, and of course there is also the cost that comes with mass-digitisation and the maintenance of databases and websites. Hopefully in the future there will be (still) more cooperation between IT people, researchers and libraries.

- 1.

This is something that I also mentioned in an earlier blog post: https://www.c2dh.uni.lu/thinkering/digitise-or-not-digitise-question