We got off to a great start in the beautiful Museum of Natural History with the opening keynote lecture from Diane Jakacki on Digital Humanists as “Jack of all Trades, Master of One”, in which she spoke about intermethodological collaboration.1 She described the general tensions that Digital Humanists face when they have to apply several methods and tools. Furthermore, they are expected to be fluent in several disciplines, which can create anxiety at the institutional level. But instead of seeing digital humanists as jacks of all trades, we can think of them as polymaths, people of wide-ranging knowledge and learning. To illustrate this convergence of disciplines and methods in the digital humanities, she introduced more than ten different projects across five methods, which I will briefly sum up here.

- Preservation: The great parchment book, Livingstone online

- Text Corpus: Women Writers project, Perseus

- Text Editions: Walt Whitman Archive, Holinshed project

- Social Networks: Quantifying Kissinger, Six degrees of Francis Bacon

- Mapping: Orbis, Map of Early Modern London, Moravian Lives

- Other: Internet Shakespeare Editions, REED Online

To prevent our projects from becoming siloed and our research from becoming obsolete, we should collaborate from the beginning to create sustainable projects. We also need to think about the interoperability or interchangeability between projects by working with specialists from the inception, in a horizontal rather than a centralised structure.

- 1. Diane Jakacki, “Jack of all Trades, Master of One: the Promise of Intermethodological Collaboration” (opening keynote, Digital Humanities at Oxford Summer School, Oxford, July 3-7, 2017). Podcast: http://podcasts.ox.ac.uk/2017-opening-keynote-jack-all-trades-master-one-promise-intermethodological-collaboration.

After the morning coffee break, the workshop sessions started and I attended the Linked Open Data strand presented by Dr Terhi Nurmikko-Fuller and John Pybus. Now for those of you who have never heard of Linked Open Data (LOD) as a method or the Semantic Web on which it is published, let me give a brief description of what Tim Berners-Lee envisioned:

“The Semantic Web is a vision: the idea of having data on the Web defined and linked in a way that it can be used by machines not just for display purposes, but also for automation, integration and reuse of data across various applications.”1

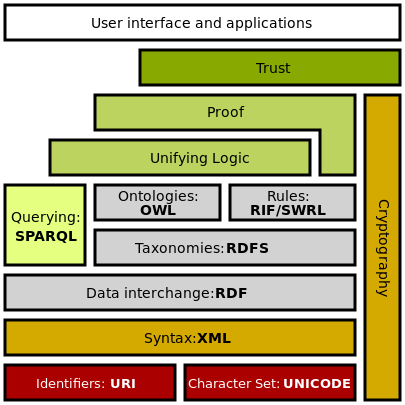

In order to achieve this vision in real life, the Semantic Web is based on the Resource Description Framework (RDF), which consists of triples that contain a subject (e.g. William Shakespeare), a predicate or property (e.g. is a) and an object or value (e.g. Playwright). Each element of a triple can be a Uniform Resource Identifier (URI), which can link to a URL on the web. In order to create linked open data sets, you need several tools from the semantic web stack visualised here:

On Monday we were introduced to the basics of RDF, a means of encoding data that is machine-readable and self-describing. The main reasons for using RDF instead of a regular relational database or XML are that RDF is flexible, since it can store any connection between nodes; that the data is connected into a graph instead of tables; and that the URIs enable data to be combined using web technology. You can express RDF in RDF/XML, JSON-LD, or Turtle (we were fans of Turtle, because it’s much more human-readable), and you can convert between these serialisations in an online tool such as EasyRDF. Once you have your RDF triples, you can store them in a triplestore, such as OpenLink Virtuoso, but you should compare a few to see which one suits your project.

Tuesday was all about ontologies and OWLs (pun intended). The Web Ontology Language (OWL) is one way of writing down an ontology, a description of concepts used to build semantic models with RDF, adding meaning to data which can be reused by others. Best practice is to start from existing and well-known ontologies so that others can link to your data, but for more specialised concepts, you might need to build your own.

- Useful ontologies: RDF Schema, XSD, Dublin Core, SKOS, FOAF

- Cultural Heritage: CIDOC-CRM, FRBRoo, PerioDO, Europeana Data Model

- Bibliographical metadata: MODS/RDF, MADS/RDF, Bibframe, BiBO, Schema.org

- Other: Timeline Ontology
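To make the idea of triples that reuse well-known ontologies a little more concrete, here is a minimal sketch in plain Python. It is not how any particular RDF library works: triples are simply tuples of URI strings, and the `to_ntriples` helper and the `http://example.org/` namespace are assumptions made up for this illustration. The FOAF, Dublin Core Terms and `rdf:type` URIs are the published ones.

```python
# Namespaces of existing vocabularies (published URIs).
FOAF = "http://xmlns.com/foaf/0.1/"
DCTERMS = "http://purl.org/dc/terms/"
RDF_TYPE = "http://www.w3.org/1999/02/22-rdf-syntax-ns#type"

# Hypothetical namespace for our own resources (an assumption for this sketch).
EX = "http://example.org/"

# Each triple is (subject, predicate, object); every element is a URI here.
triples = [
    (EX + "WilliamShakespeare", RDF_TYPE, FOAF + "Person"),
    (EX + "Hamlet", DCTERMS + "creator", EX + "WilliamShakespeare"),
]


def to_ntriples(triples):
    """Serialise URI-only triples as N-Triples, one statement per line."""
    return "\n".join(f"<{s}> <{p}> <{o}> ." for s, p, o in triples)


print(to_ntriples(triples))
```

Because the predicates come from FOAF and Dublin Core rather than a home-made vocabulary, anyone who already understands those ontologies can interpret and link to these two statements.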

In RDF Schema you can define classes of resources and their subclasses, and define properties. A property can declare a domain and a range, the domain being the class of the subject and the range being the class of the object. Now this all sounds quite complicated, but for people who have built databases before, you can consider the ontology as an Entity-Relationship model showing the structure of your dataset (with fewer limits). See also my previous blogpost on the limitations of data. The easiest way to design an ontology is to start with good old pen and paper (and colour, lots of colour); only once you have a structure in mind should you start designing your ontology in a tool such as Protégé.
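The domain and range idea can be sketched in a few lines of plain Python. This is only an illustration under simplifying assumptions: the `ex:` names and the `infer_types` function are hypothetical, prefixed names are kept as short strings instead of full URIs, and each property is assumed to have at most one domain and one range.

```python
RDF_TYPE = "rdf:type"
RDFS_DOMAIN = "rdfs:domain"
RDFS_RANGE = "rdfs:range"

# Schema: the subject of ex:wrote is an ex:Author, its object an ex:Work.
schema = [
    ("ex:wrote", RDFS_DOMAIN, "ex:Author"),
    ("ex:wrote", RDFS_RANGE, "ex:Work"),
]

# Data: a single statement that uses the property.
data = [("ex:Shakespeare", "ex:wrote", "ex:Hamlet")]


def infer_types(schema, data):
    """Derive rdf:type statements from domain and range declarations."""
    domains = {p: c for p, kind, c in schema if kind == RDFS_DOMAIN}
    ranges = {p: c for p, kind, c in schema if kind == RDFS_RANGE}
    inferred = set()
    for s, p, o in data:
        if p in domains:
            inferred.add((s, RDF_TYPE, domains[p]))  # subject is in the domain class
        if p in ranges:
            inferred.add((o, RDF_TYPE, ranges[p]))  # object is in the range class
    return inferred


for triple in sorted(infer_types(schema, data)):
    print(triple)
```

The point of the sketch is that domain and range are not validation rules as in a database schema: they let a reasoner conclude that the subject is an author and the object a work, even though the data never says so explicitly.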

- 1. V. Kashyap, C. Bussler, and M. Moran, The Semantic Web: Semantics for Data and Services on the Web, Data-Centric Systems and Applications (Springer Berlin Heidelberg, 2008), 3.

On Wednesday we were introduced to triplestores and we learned how to query existing data using the SPARQL Protocol and RDF Query Language, or simply SPARQL. This query language is used to pull values from structured and semi-structured data and to explore datasets by querying relationships that are not known in advance.1 You can use an endpoint such as Virtuoso to write and run your queries.
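To give a feel for what such a query does, the sketch below is a toy triple-pattern matcher in plain Python. It is not a SPARQL engine and not how Virtuoso works; the `match` function and the `ex:` data are hypothetical. It only mimics the core idea of a SPARQL pattern: terms starting with `?` are variables, and every triple that fits the pattern yields one set of bindings.

```python
triples = [
    ("ex:Shakespeare", "ex:wrote", "ex:Hamlet"),
    ("ex:Shakespeare", "ex:wrote", "ex:Macbeth"),
    ("ex:Marlowe", "ex:wrote", "ex:DoctorFaustus"),
]


def match(pattern, triples):
    """Return one binding dict per triple that fits the pattern.

    Terms starting with '?' are variables; all other terms must match exactly.
    """
    results = []
    for triple in triples:
        binding = {}
        for term, value in zip(pattern, triple):
            if term.startswith("?"):
                # A variable used twice must bind to the same value both times.
                if binding.setdefault(term, value) != value:
                    break
            elif term != value:
                break
        else:
            results.append(binding)
    return results


# Roughly what this SPARQL query asks:
#   SELECT ?work WHERE { ex:Shakespeare ex:wrote ?work }
works = [b["?work"] for b in match(("ex:Shakespeare", "ex:wrote", "?work"), triples)]
print(works)
```

Replacing the subject with a variable as well, as in `("?author", "ex:wrote", "?work")`, returns every author and work pair, which is the kind of open-ended exploration the query language is designed for.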

After these important introductions, on Thursday we applied everything we had learned to the collection of the British Museum, exploring the beta version of their ResearchSpace, where you can reconstruct and reunify cultural heritage objects in a click-through environment. On Friday we got to know a few other use cases, such as Annalist, Graham Klyne's tool for prototyping your information model or creating small datasets. The final example we were introduced to was Pelagios, which is mostly interesting for annotating texts and linking location names to geodata, both modern geodata and geodata on ancient cities.

Clearly, the semantic web already contains many linked open data collections, which are nicely visualised on the website of the Linking Open Data cloud. This cloud has grown immensely over the last few years as you can see by comparing these visualisations.

- 1. Bob DuCharme, Learning SPARQL: Querying and Updating with SPARQL 1.1 (O’Reilly, 2013).

During the workshop sessions we also had some lectures by other researchers, such as Professor Andrew Meadows, who on Monday introduced nomisma.org, which contains an ontology for, and a set of linked open data on, numismatic concepts, mostly relating to Greek and Roman coins. Another workshop lecture worth mentioning was by Emeritus Professor Donna Kurtz, who is working on several projects making museum collections from all over the world available online. Not only did she advise people in academia working on projects to be visionary, determined, public-oriented, and above all persistent, but also:

Luckily, this workshop lecture on Wednesday made up for the disappointing morning lecture by Professor Ralph Schroeder and Laird Barrett on “Big Data and the Humanities: How digital research, computational techniques and big data contribute to knowledge”.1 With statements such as 'science just works' and 'data is objective', the lecture caused quite the controversy. But since I cannot possibly formulate my opinion on these statements in the span of this blogpost, it will have to wait until later. I do invite others to take a look at the lecture, form their own opinion, and perhaps think about what humanities scholars bring to big data (instead of what big data offers us). The closing keynote by Professor Andrew Prescott had a little more on offer, as he discussed “What Happens When the Internet of Things Meets the Humanities?”2 The techno-utopian view of the Internet of Things explained in this video can seem controlling and intrusive: it challenges privacy and identity, and encourages commercial use of data through hidden algorithms that alter reality.

- 1. Ralph Schroeder and Laird Barrett, “Big Data and the Humanities: How digital research, computational techniques and big data contribute to knowledge” (lecture, Digital Humanities at Oxford Summer School, Oxford, July 3-7, 2017). Podcast: http://podcasts.ox.ac.uk/big-data-and-humanities.

- 2. Andrew Prescott, “What Happens When the Internet of Things Meets the Humanities?” (closing keynote, Digital Humanities at Oxford Summer School, Oxford, July 3-7, 2017). Podcast: http://podcasts.ox.ac.uk/2017-closing-keynote-what-happens-when-internet....

Altogether, the Internet of Things can seem dehumanising, whereas Professor Prescott believes it can reengage us with the materiality of the world around us, escaping the anonymity of the data deluge. Digital Humanities can contribute to the Internet of Things by making it accessible and grounded in communities, and can help in making us feel more, not less, human. He demonstrated some of the possibilities based on the yourfry.com project, where anyone interested was invited to make their own interpretation and reinvention of Stephen Fry’s book.1 These projects prove that Digital Humanities does not have to be rectangular: it can reimagine materials such as ink and paper, create new ways of engaging with material culture, and participate in the pull economy as creators, not consumers, and thus facilitate a thinking-through-making methodology. Some of the technologies discussed were conductive ink, ultra-thin touchscreen displays, art-codes (similar to QR codes) and electronic tattoos.

- 1. Videos of some of the projects demonstrated:

Sarah Weingold, Book of Bipolarity: https://youtu.be/s9eoUycIk5M.

Cathy Horton, Interactive Shipping Forecast Map: https://youtu.be/HMGSQ8ESZRA.

Mike Shorter, Jon Rogers, A Touch of Fry: https://youtu.be/HMGSQ8ESZRA.